20 years of transparency in PDF

Excerpt: PDF Association CTO Peter Wyatt explains the transparent imaging model in PDF and recounts its significance since Adobe introduced this technology in 2001.

About the author: Peter Wyatt is the PDF Association’s CTO and an independent technology consultant with deep file format and parsing expertise, who is a developer and researcher actively working on PDF technologies …


The end of 2021 marks 20 years since the Adobe PDF 1.4 specification introduced partial transparency into mainstream page description languages. The transparent imaging model extended “… the opaque imaging model of earlier versions [of PDF]” to include the ability “… to paint objects with varying degrees of opacity, allowing previously painted objects to show through” (Adobe PDF 1.4).

This was a very significant technical change and a giant leap forward for widely adopted mainstream page description languages (PDLs), as both PostScript and earlier versions of PDF only supported an opaque imaging model. It arguably remains the single most significant technical change introduced into PDF in almost 30 years. Although PDF was not the first PDL to define and use native partial transparency, its dominance – even back in 2001 – forced the computer industry to take notice. Today, every mainstream file format and graphics application supports such transparency, but it was PDF that established partial transparency as a first-class native file format feature.

The transparent imaging model introduced by Adobe in PDF 1.4 provides capabilities beyond alpha compositing. These capabilities give authors the ability to create artistic and realistic effects using a minimal number of objects while also avoiding the need to rasterize their designs when exporting to PDF. Native transparency support allows device-independent vector graphics and text with originally authored colors.

This article reflects on why the addition of native transparency into PDF 20 years ago was a game-changer for both authors and consumers of PDF. It explains the idea of full and partial transparency for a non-technical audience and introduces the basic concepts supporting this core feature in PDF. It is not a deep dive into the technicalities of optimizing transparency handling for specific vertical applications; many other resources cover those dedicated topics.

What is full and partial transparency?

Full transparency refers to the ability to make an object, or part of an object, fully invisible. Common examples of full transparency are stencil masks where a monochrome image is used to “turn off” (i.e., choose not to paint) certain pixels of an image, and clipping paths which “clip out”, or cause not to render, specific content outside the path. Both Level 1 PostScript and the earliest versions of PDF provided such capabilities. Adobe described stencil masks in PDF 1.0 (1993) as:

It is similar to using a stencil when painting or airbrushing – a stencil with one or more holes in it is placed on a page. As long as the stencil remains in place, paint only reaches the page through the holes in the stencil. After the stencil is removed, paint can again be applied anywhere on the page. More than one stencil may be used in the production of a single page, and if a second stencil is added before the first one is removed, paint only reaches the page where there are holes in both stencils.
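The stencil analogy above can be sketched in a few lines of Python. This is only an illustration, not how any PDF renderer is implemented: the function name `paint_through_stencils`, the tiny character grid standing in for a page, and the convention that `1` marks a hole in the stencil are all assumptions made for this example.

```python
def paint_through_stencils(page, color, stencils):
    """Return a new page where `color` lands only where every stencil has a hole.

    `page` is a grid (list of rows) of color values. Each stencil is a grid of
    the same size in which 1 marks a hole and 0 marks solid stencil material
    (an illustrative convention chosen for this sketch).
    """
    return [
        [
            # Paint reaches the page only where all active stencils have a hole.
            color if all(stencil[y][x] for stencil in stencils) else page[y][x]
            for x in range(len(page[0]))
        ]
        for y in range(len(page))
    ]


blank_page = [["."] * 4 for _ in range(2)]
stencil_a = [[1, 1, 0, 0], [1, 1, 0, 0]]  # holes on the left half
stencil_b = [[0, 1, 1, 0], [0, 1, 1, 0]]  # holes in the middle

one_stencil = paint_through_stencils(blank_page, "#", [stencil_a])
two_stencils = paint_through_stencils(blank_page, "#", [stencil_a, stencil_b])
no_stencil = paint_through_stencils(blank_page, "#", [])
```

With one stencil, paint covers the left half; with both in place, paint reaches only the single column where their holes coincide; with no stencil, paint can land anywhere. Every pixel is either painted or not, which is what makes this full rather than partial transparency.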

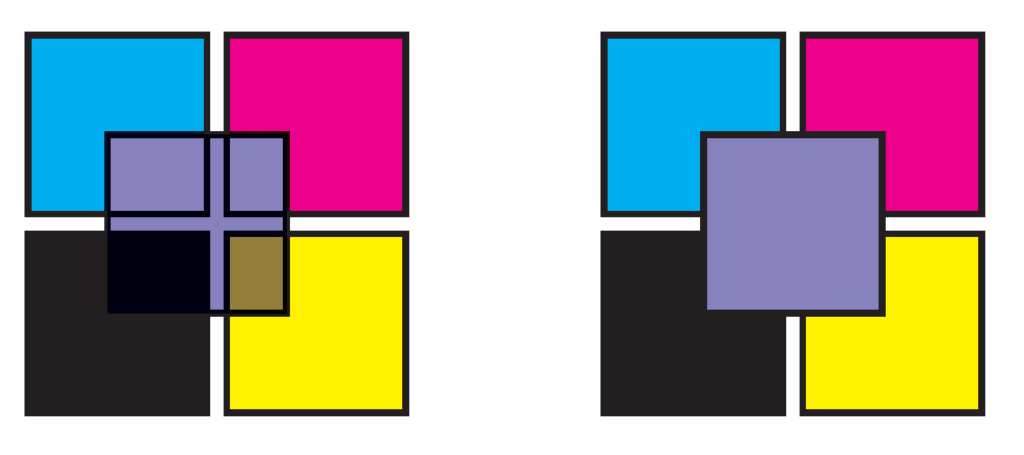

Partial transparency is far more complex than full transparency, as some proportion of both a foreground object and anything below it (the background) needs to be mixed together (technically known as alpha compositing or alpha blending). PDF 1.4’s support for the transparent imaging model introduced many new concepts into PDF, including constant alpha, soft masks, blend modes, matte, shape and opacity, and transparency groups. The existing PostScript/PDF opaque imaging model remained unaffected.

What does transparency enable?

Transparency enables features such as drop shadows, feathering, soft edges, blurs, and glows, as well as partial “see-through” of overlapping objects. These features offer graphic designers and artists many more opportunities for creativity and flexibility than purely opaque objects. Today, conventional illustration and office suite applications provide every consumer with the ability to apply transparent effects to any object, but in 2001 such capabilities were the domain of very specialized applications. Operating systems and graphics rendering capabilities were also not nearly as mature as they are today.

By retaining the source format’s native transparency in PDF output, files are far more device-independent, as without native transparency many graphic effects are only achievable by pre-rendering to an image. Depending on design and layout, such rendering might extend to the entire page, making for very large files and/or poor-quality text. Rasterized images also bake in many assumptions about target devices’ resolution and color capabilities – if any content needs to be tweaked, edited or re-targeted then a full roundtrip from the authoring application is required.

What came before?

With early versions of PDF, clever graphic artists and designers could achieve an appearance of partial transparency by utilizing overprinting: literally the application of ink on top of previous ink applications. However, overprinting support was generally limited to professional printing workflows, with very limited on-screen support in PDF viewers. Even today, not all PDF viewers support an “overprint preview”.

(left: appearance when overprinting active, right: appearance when overprinting inactive)

Some early implementations of PDF 1.4 also struggled to implement reliable, correct and performant support for the new transparent imaging model because they relied on underlying opaque rendering technologies (e.g., a PostScript interpreter). At the time, PDF 1.4 had its detractors and naysayers because of these “surprise” new and complex requirements and the associated engineering burden, while others saw an opportunity to create innovative technologies and recognized the benefits transparency could bring to the broader PDF ecosystem. Contributing to the technical challenges for early implementers were errors and limitations in the original PDF 1.4 documentation that were not corrected until January 2006, when Adobe published its “PDF Blend Modes: Addendum”.

Some implementations also choose to “flatten transparency”, meaning they convert overlapping transparent objects into a multitude of (hopefully) accurately abutting opaque objects, which can often lead to undesirable artifacts, visible boundaries, or color differences where none should exist. Alternatively, they might rasterize large portions of a PDF page to pixels, creating far larger files and removing any semblance of device independence.

(courtesy Ghent Workgroup’s “Transparency Best Practices”)

Today, users working with PDF/X should be very familiar with the numerous test suites, test pages, and control strips devoted to ensuring reliable overprinting and transparency rendering in graphic arts workflows. These include the European Color Initiative’s Altona Test Suite, the Ghent Workgroup’s “Ghent PDF Output Suite”, and others from FOGRA, IDEAlliance, etc. These types of assets help assure both print suppliers and print buyers that a digital front end (DFE) or raster image processor (RIP) is correctly configured to accurately print a PDF file with native transparency.

Porter & Duff alpha compositing

The geometric principles of rendering with partial transparency were first described in the seminal 1984 paper Compositing Digital Images, by Thomas Porter and Tom Duff, who both worked for Lucasfilm at the time. Although the mathematics of transparency rendering (you can find the specifications in clause 11 of ISO 32000) can appear scary, the basics are relatively straightforward.

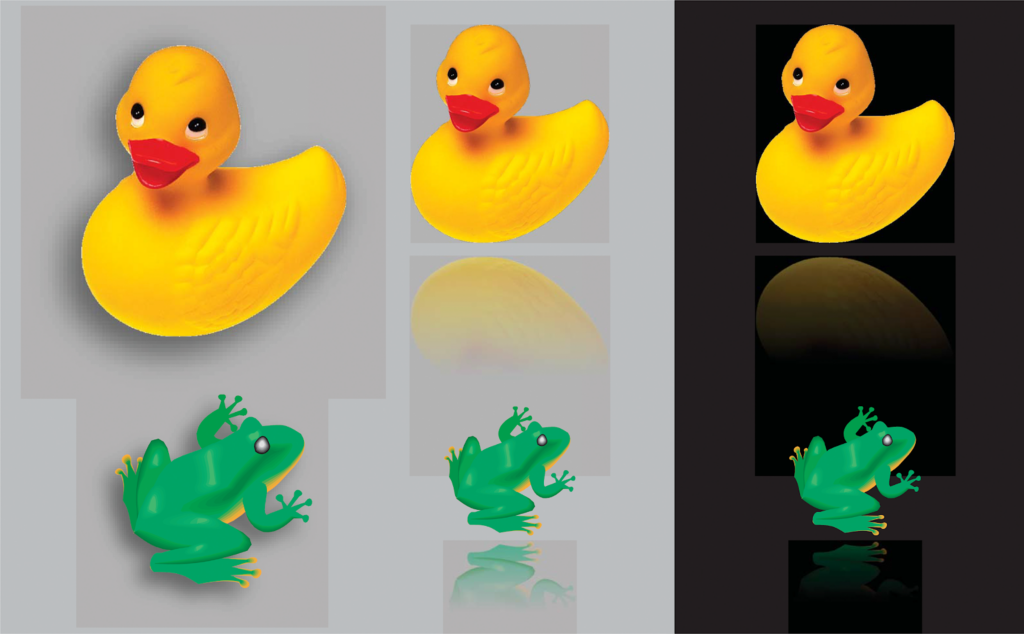

Each object or pixel has an associated alpha value, a number that represents its degree of opacity. This ranges from 0.0 (fully transparent, a.k.a. invisible) to 1.0 (fully opaque, obscuring everything below). Since graphic objects are painted in the order in which they appear in a content stream (known as Z-order), and since objects can overlap, a partially transparent object on top of other objects will let some proportion of those underlying objects show through. If multiple overlapping objects all have partial transparency, then multiple objects in the “stack” below will be partially visible. Note also that the level of alpha (opacity) is independent of the color of the object – any color may be partially transparent.

Although Porter & Duff defined multiple compositing operators, PDF uses the OVER operator to calculate the color that results from blending a foreground and background object (where the background object itself may have been the result of compositing other objects lower in the Z-order). The OVER operator is effectively what intuition tells us will happen if, for example, we consider the result of stacking layers of partially transparent cellophane over an object.
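For readers who prefer to see the arithmetic, here is a minimal Python sketch of the OVER operator. It assumes non-premultiplied colors with components and alpha in the range 0.0 to 1.0; the function names `over` and `composite_stack` are illustrative only and do not come from the PDF specification or from any library.

```python
def over(foreground, background):
    """Composite `foreground` OVER `background`.

    Each argument is a (color, alpha) pair, where color is a tuple of
    components in 0.0..1.0 (not premultiplied by alpha) and alpha is 0.0..1.0.
    """
    fg_color, fg_alpha = foreground
    bg_color, bg_alpha = background

    # The background only shows through the part the foreground leaves uncovered.
    out_alpha = fg_alpha + bg_alpha * (1.0 - fg_alpha)
    if out_alpha == 0.0:
        # Nothing was painted at all; the color is undefined, so use zeros.
        return tuple(0.0 for _ in fg_color), 0.0

    out_color = tuple(
        (f * fg_alpha + b * bg_alpha * (1.0 - fg_alpha)) / out_alpha
        for f, b in zip(fg_color, bg_color)
    )
    return out_color, out_alpha


def composite_stack(objects_bottom_to_top):
    """Composite a Z-ordered stack, painting each object over the result so far."""
    result = objects_bottom_to_top[0]
    for obj in objects_bottom_to_top[1:]:
        result = over(obj, result)
    return result


white_paper = ((1.0, 1.0, 1.0), 1.0)
half_red = ((1.0, 0.0, 0.0), 0.5)
pink = over(half_red, white_paper)
```

Painting 50% opaque red over opaque white gives a fully opaque pink, `(1.0, 0.5, 0.5)`, which matches the cellophane intuition: half of the white still shows through.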

Porter & Duff explained their model in terms of geometric sub-pixel contributions, where each pixel includes varying contributions of foreground color, foreground alpha, background color, and background alpha. I have created this simple interactive SVG model that aids in understanding how the conceptual sub-pixel contributions from both the foreground and background objects contribute to generating a final appearance.
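The geometric idea can also be sketched numerically. The snippet below assumes, as Porter & Duff did, that the foreground and background cover a pixel independently of each other, so that the pixel area splits into four regions. The function names `subpixel_regions` and `over_contributions` are made up for this illustration.

```python
def subpixel_regions(fg_alpha, bg_alpha):
    """Split a unit pixel into four coverage regions.

    Treats each alpha as the fraction of the pixel an object covers and
    assumes the two coverages are uncorrelated.
    """
    return {
        "neither": (1.0 - fg_alpha) * (1.0 - bg_alpha),
        "foreground_only": fg_alpha * (1.0 - bg_alpha),
        "background_only": (1.0 - fg_alpha) * bg_alpha,
        "both": fg_alpha * bg_alpha,
    }


def over_contributions(fg_alpha, bg_alpha):
    """Return (foreground share, background share) of the pixel under OVER."""
    regions = subpixel_regions(fg_alpha, bg_alpha)
    # Under OVER the foreground wins wherever both objects cover the pixel.
    fg_share = regions["foreground_only"] + regions["both"]
    bg_share = regions["background_only"]
    return fg_share, bg_share


regions = subpixel_regions(0.5, 0.5)
fg_share, bg_share = over_contributions(0.5, 0.5)
```

For two objects that are each 50% opaque, every region covers a quarter of the pixel. The foreground contributes half of the pixel, the background a quarter, and the remaining quarter is left unpainted, so the combined alpha is 0.75.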

Of course, increased complexity introduces new issues, and not every early PDF transparency-aware renderer was error-free. Nonetheless, as adoption of transparency spread from specialized vertical markets such as graphic arts to mainstream office and illustration applications, PDF software vendors added the necessary support.

From the interactive SVG model above, it can be appreciated that small errors in color processing or alpha calculations can lead to visible differences. Overuse of transparency effects in a PDF may impact rendering performance as more calculations may be required for each output device pixel. If you work in certain verticals (such as high-speed variable data printing), you may already have specific guidelines on the efficient use of transparency in order to maintain throughput, such as the Ghent Workgroup’s “Transparency Best Practices”. But if you are a general consumer of PDFs for business or the web, then the use of native PDF transparency is something that you will already take for granted.

More than just transparency

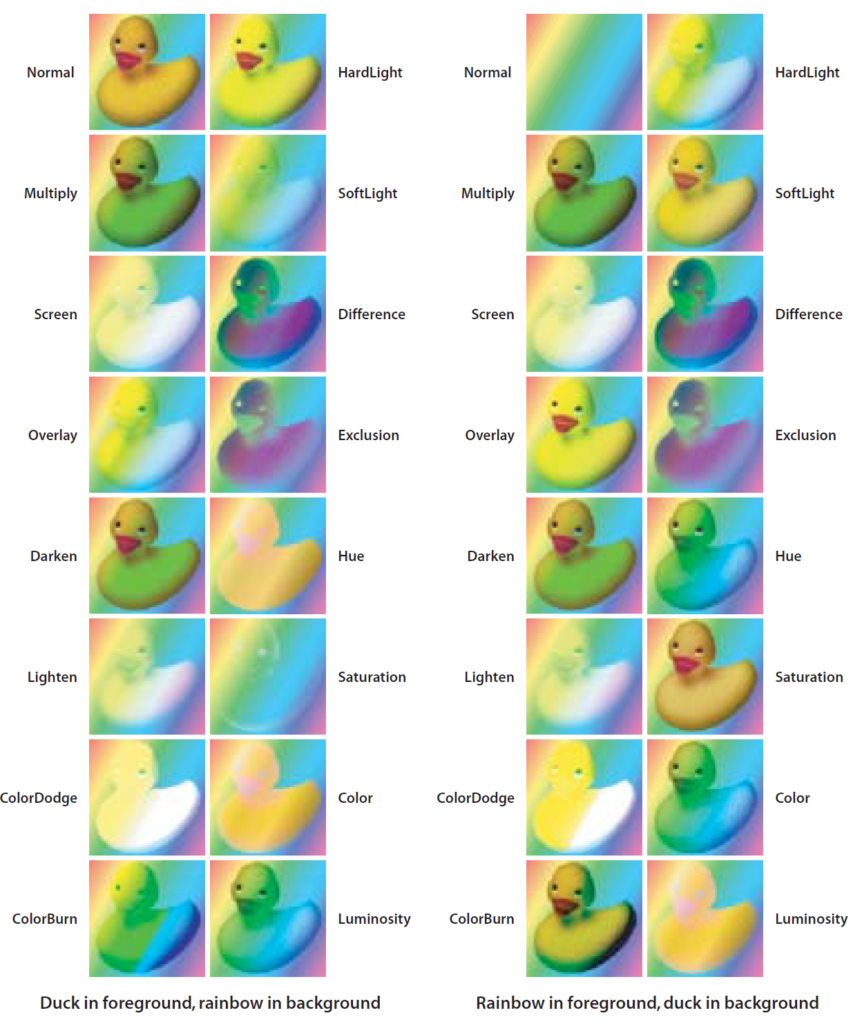

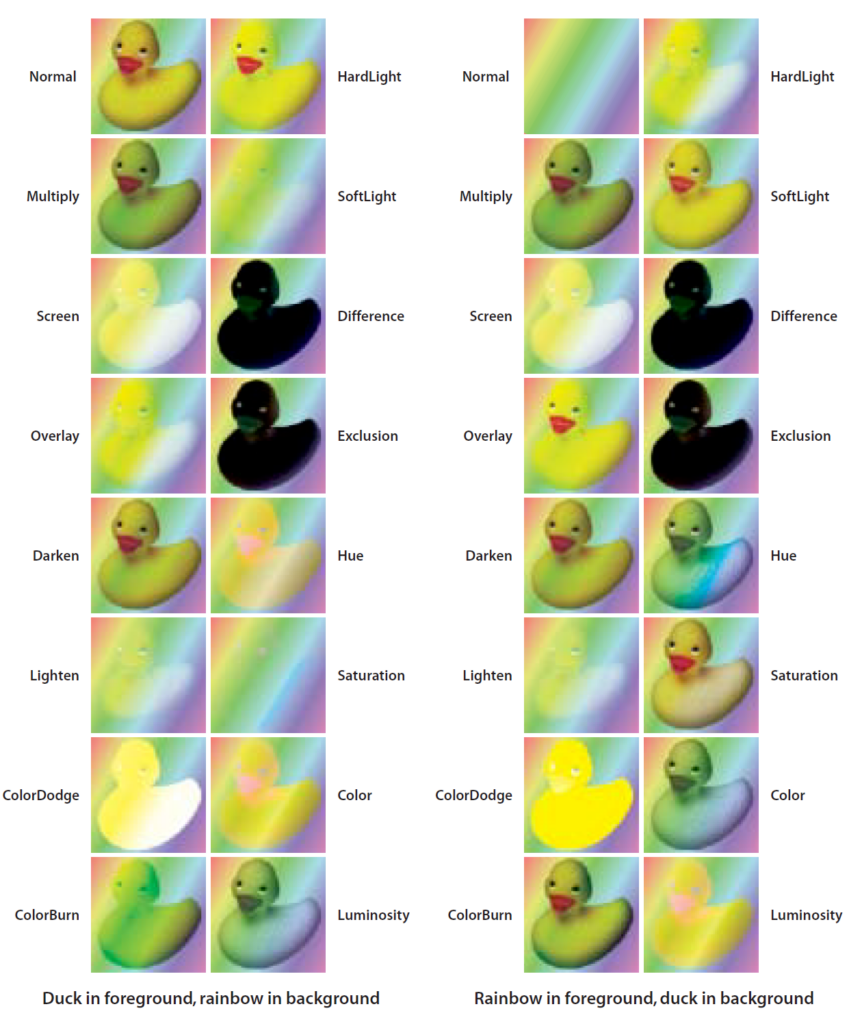

With PDF 1.4, Adobe also introduced other more advanced effects known as Blend Modes. A Blend Mode is a mathematical function (formula) that combines (blends) foreground and background colors and alphas to yield another color. Because of this, the color space in which blending occurs (known as the blending color space) can have a large impact on the final appearance.
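As a rough sketch of how a blend mode slots into compositing, the Python below implements a handful of the simpler (separable) blend functions together with the basic compositing formula described in the transparency clause of ISO 32000. It assumes an additive color space such as RGB with components in 0.0 to 1.0, and it ignores shape, soft masks and transparency groups. The names `BLEND_FUNCTIONS` and `composite` are illustrative, not part of any PDF API.

```python
# Per-component blend functions B(cb, cs): cb is the backdrop (background)
# component and cs is the source (foreground) component.
BLEND_FUNCTIONS = {
    "Normal": lambda cb, cs: cs,
    "Multiply": lambda cb, cs: cb * cs,
    "Screen": lambda cb, cs: cb + cs - cb * cs,
    "Darken": lambda cb, cs: min(cb, cs),
    "Lighten": lambda cb, cs: max(cb, cs),
}


def composite(mode, backdrop, source):
    """Blend `source` onto `backdrop` using the named blend mode.

    Both arguments are (color, alpha) pairs with non-premultiplied color
    components and alpha in 0.0..1.0.
    """
    backdrop_color, backdrop_alpha = backdrop
    source_color, source_alpha = source
    blend = BLEND_FUNCTIONS[mode]

    result_alpha = backdrop_alpha + source_alpha - backdrop_alpha * source_alpha
    if result_alpha == 0.0:
        # Nothing painted; the color is undefined, so use zeros.
        return tuple(0.0 for _ in backdrop_color), 0.0

    ratio = source_alpha / result_alpha
    result_color = tuple(
        (1.0 - ratio) * cb
        + ratio * ((1.0 - backdrop_alpha) * cs + backdrop_alpha * blend(cb, cs))
        for cb, cs in zip(backdrop_color, source_color)
    )
    return result_color, result_alpha


grey = ((0.5, 0.5, 0.5), 1.0)
lime = ((0.5, 1.0, 0.0), 1.0)
multiplied = composite("Multiply", grey, lime)
screened = composite("Screen", grey, lime)
```

Multiply can only darken and Screen can only lighten, which is why the same two colors give noticeably different results. With the Normal blend mode the formula reduces to ordinary alpha compositing. Because the functions operate on raw component values, running the same blend on the same visual colors expressed in a different color space would generally produce a different appearance.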

Adobe used “rubber ducky and rainbow” colored plates in the publication of PDF 1.4 to illustrate blend modes and the effects of the blending color space. For some combinations, the reason for the blend mode’s name becomes apparent; for others, the effect can be difficult to comprehend. If you are curious about the visual effects that various PDF blend modes can achieve, this simple interactive demonstration may help.

Recent changes introduced in PDF 2.0 (see ISO 32000-2:2020) include transparency and blend mode attributes for annotations, allowing annotation appearances to benefit from advanced blending with the underlying document. In addition, some early ideas have been deprecated in PDF 2.0, such as the special blend mode name Compatible and the array of blend modes specified for the BM key in graphics state parameter dictionaries, as these were not adopted by industry.

Native transparency and blending are among the most complex of imaging technologies, so support has taken time to mature. Of course, the Adobe suite of applications provided support at the time, but only much later would other file formats and platforms expand their core capabilities to match those specified for PDF. SVG was much later in adopting the same set of advanced compositing blend modes originally defined in PDF 1.4, but also included a larger set of the Porter & Duff compositing operators and a more advanced filter model. At about the same time, CSS was also introducing the same PDF blend mode support, indicating the acceptance of advanced transparency. Even more recently, Apple’s SwiftUI framework for screen display defined the same set of blend modes as are defined for PDF. It is also interesting to note that the vast majority of the references on the Wikipedia page for blend modes are dated roughly a decade after PDF 1.4 was first released! Adobe undeniably led the way here.

Conclusion

Today, every quality PDF viewer and renderer supports all native transparency features as they are widely generated and are a core part of the portable document experience that PDF provides.

Today, authoring partial transparency effects is easy, and native support across all devices means that we are used to seeing partial transparency effects in everyday advertising, across the web, and in many documents with overlapping graphics, drop shadows, and irregular-shaped images. Twenty years on, we can look back and note that Adobe’s bold move to “break” PDF in 2001 by introducing a radical technical change played a major role in kick-starting modern consumers’ expectations regarding transparency.